TL;DR: Several file-based embedded database wrappers are available for Rust. This article examines some of them and demonstrates how to incorporate them into an application written with Tauri and Rust.

This is the second post on porting UMLBoard to Tauri. While the previous article focused on interprocess communication, this time, we will examine how we could implement the local data storage subsystem with Rust.

- Porting inter-process communication to Tauri (see last post)

- Accessing a document-based local data store with Rust (this post!)

- Validate the SVG compatibility of different webviews

- Check if Rust has a library for automatic graph layouting

To achieve this, we will first look at some available embedded datastore options for Rust and then see how to integrate them into the prototype we created in our last post.

But before we start coding, let’s take a quick look at the status quo:

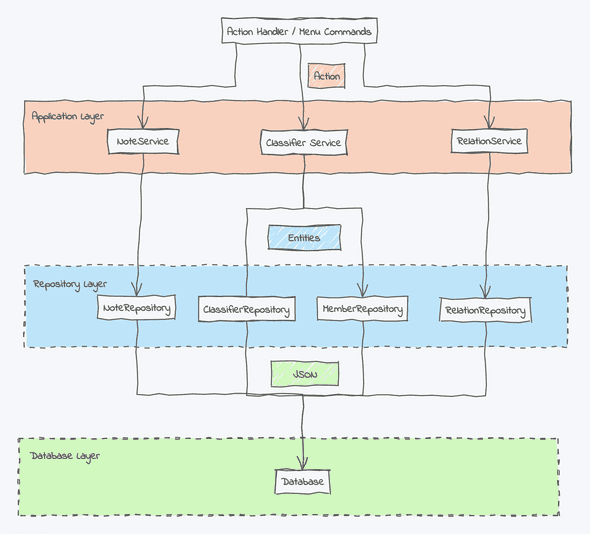

Basic Architecture

The current, Electron-based UMLBoard main process uses a layered architecture split into several application services. Each service accesses the database through a repository for reading and writing user diagrams. The data layer uses nedb (or rather, its fork @seald-io/nedb), an embedded, single-file JSON database.

This design allows for a better separation between application code and database-specific implementations.

Repositories are used to separate the application logic from the persistence layer.

Porting from TypeScript to Rust

To port this architecture to Rust, we must reimplement each layer individually.

We will split this up into four subtasks:

- Find a file-based database implementation in Rust.

- Implement a repository layer between the database and our services.

- Connect the repository with our business logic.

- Integrate everything into our Tauri application.

Following the strategy from our last post, we will go through each step one by one.

1. Finding a suitable file-based database implementation in Rust.

There are numerous Rust wrappers for both SQL and NoSQL databases. Let’s look at some of them.

(Please note this list is by no means complete, so if you think I missed an important one, let me know, and I can add it here.)

1. unqlite

A Rust wrapper for the UnQLite database engine. It looks quite powerful but is not actively developed anymore — the last commit was a few years ago.

2. PoloDB

A lightweight embedded JSON database. While it’s in active development, at the time of writing this (Spring 2023), it doesn’t yet support asynchronous data access — not a huge drawback, given that we’re only accessing local files, but let’s see what else we have.

3. Diesel

The de-facto SQL ORM and query builder for Rust.

Supports several SQL databases, including SQLite. Unfortunately, the SQLite driver does not yet support asynchronous operations [1].

4. SeaORM

Another SQL ORM for Rust with support for SQLite and asynchronous data access, making it a good fit for our needs. Yet, while trying to implement a prototype repository, I realized that defining a generic repository for SeaORM can become quite complex due to the number of required type arguments and constraints.

5. BonsaiDB

A document-based database, currently in alpha but actively developed. Supports local data storage and asynchronous access.

Also provides the possibility to implement more complex queries through Views.

6. SurrealDB

A wrapper for the SurrealDB database engine. Supports local data storage via RocksDB and asynchronous operations.

Among these options, BonsaiDB and SurrealDB look most promising:

They support asynchronous data access, don’t require a separate process, and have a relatively easy-to-use API.

So, let’s try to integrate both of them into our application.

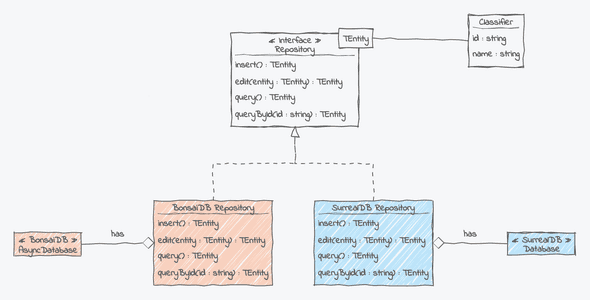

2. Implementing a Repository Layer in Rust

Since we want to test two different database engines, the repository pattern looks like a good option as it allows us to decouple the database from the application logic. In that way, we can easily switch the underlying database system.

Defining our repository’s behavior in Rust is best achieved with a trait. For our proof-of-concept, some default CRUD operations will be sufficient:

    use async_trait::async_trait;

    // Trait describing the common behavior of
    // a repository. TEntity is the type of
    // domain entity handled by this repository.
    // The methods are async and take the same arguments
    // as the implementations shown further down.
    #[async_trait]
    pub trait Repository<TEntity> {
        async fn query_all(&self) -> Vec<TEntity>;
        async fn query_by_id(&self, id: &str) -> Option<TEntity>;
        async fn insert(&self, data: TEntity, id: &str) -> TEntity;
        async fn edit(&self, id: &str, data: TEntity) -> TEntity;
    }

Our repository is generic over its entity type, allowing us to reuse our implementation for different entities. We need one implementation of this trait for every database engine we want to support.
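To illustrate how such a trait decouples the callers from the storage engine, here is a minimal sketch of a third, purely in-memory backend. It is not part of the prototype: `InMemoryRepository` is a hypothetical name, and the trait is reduced to a synchronous variant so that the example only needs the standard library.

```rust
use std::collections::HashMap;
use std::sync::Mutex;

// Simplified, synchronous variant of the repository trait (assumption:
// the prototype's trait is async, this one is not).
pub trait Repository<TEntity> {
    fn query_all(&self) -> Vec<TEntity>;
    fn query_by_id(&self, id: &str) -> Option<TEntity>;
    fn insert(&self, data: TEntity, id: &str) -> TEntity;
    fn edit(&self, id: &str, data: TEntity) -> TEntity;
}

// Hypothetical in-memory backend, useful for tests.
pub struct InMemoryRepository<TEntity> {
    // The trait methods take &self, so we need interior mutability.
    items: Mutex<HashMap<String, TEntity>>,
}

impl<TEntity> InMemoryRepository<TEntity> {
    pub fn new() -> Self {
        Self { items: Mutex::new(HashMap::new()) }
    }
}

impl<TEntity: Clone> Repository<TEntity> for InMemoryRepository<TEntity> {
    fn query_all(&self) -> Vec<TEntity> {
        self.items.lock().unwrap().values().cloned().collect()
    }

    fn query_by_id(&self, id: &str) -> Option<TEntity> {
        self.items.lock().unwrap().get(id).cloned()
    }

    fn insert(&self, data: TEntity, id: &str) -> TEntity {
        self.items.lock().unwrap().insert(id.to_string(), data.clone());
        data
    }

    fn edit(&self, id: &str, data: TEntity) -> TEntity {
        // Overwrites whatever is currently stored under this id.
        self.items.lock().unwrap().insert(id.to_string(), data.clone());
        data
    }
}
```

Code written against the trait would work unchanged with this backend, which is the property we rely on when switching between BonsaiDB and SurrealDB.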

Let’s see what the implementation will look like for BonsaiDB and SurrealDB.

BonsaiDB

First, we declare a struct BonsaiRepository that holds a reference to BonsaiDB’s AsyncDatabase object we need to interact with our DB.

    use std::marker::PhantomData;

    pub struct BonsaiRepository<'a, TData> {
        // gives access to a BonsaiDB database
        db: &'a AsyncDatabase,
        // required as generic type is not (yet) used in the struct
        phantom: PhantomData<TData>,
    }

Our struct has a generic argument, so we can already specify the entity type upon instance creation.

However, since the compiler complains that the type is not used in the struct, we must define a phantom field to suppress this error [2].
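The effect of the phantom field can be reproduced without any database at all. The following sketch uses a hypothetical `FakeDb` handle and a hypothetical `TypedRepository` struct. Removing the `phantom` field makes rustc reject the struct with error E0392 because `TData` would be unused.

```rust
use std::marker::PhantomData;

// Hypothetical stand-in for a real database handle.
pub struct FakeDb;

// TData only appears inside PhantomData. The marker is a zero-sized
// type, so it does not make the struct any larger.
pub struct TypedRepository<'a, TData> {
    db: &'a FakeDb,
    phantom: PhantomData<TData>,
}

impl<'a, TData> TypedRepository<'a, TData> {
    pub fn new(db: &'a FakeDb) -> Self {
        Self { db, phantom: PhantomData }
    }

    // Gives access to the wrapped database handle.
    pub fn db(&self) -> &'a FakeDb {
        self.db
    }
}
```

Because `PhantomData` occupies no space, a `TypedRepository` is exactly as large as the reference it wraps.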

But most importantly, we need to implement the Repository trait for our struct:

    // Repository implementation for BonsaiDB database
    #[async_trait]
    impl<'a, TData> Repository<TData> for BonsaiRepository<'a, TData>
    // bounds are necessary to comply with BonsaiDB API
    where
        TData: SerializedCollection<Contents = TData>
            + Collection<PrimaryKey = String>
            + 'static
            + Unpin,
    {
        async fn query_all(&self) -> Vec<TData> {
            let docs = TData::all_async(self.db).await.unwrap();
            let entities: Vec<_> = docs.into_iter().map(|f| f.contents).collect();
            entities
        }

        // note that id is not required here, as it is already part of data
        async fn insert(&self, data: TData, _id: &str) -> TData {
            let new_doc = data.push_into_async(self.db).await.unwrap();
            new_doc.contents
        }

        async fn edit(&self, id: &str, data: TData) -> TData {
            let doc = TData::overwrite_async(id, data, self.db).await.unwrap();
            doc.contents
        }

        async fn query_by_id(&self, id: &str) -> Option<TData> {
            // return None instead of panicking if no document has this id
            let doc = TData::get_async(id, self.db).await.unwrap();
            doc.map(|d| d.contents)
        }
    }

At the time of writing, Rust doesn’t yet support async functions in traits, so we must use the async_trait crate here [3]. Our generic type also needs trait bounds to tell Rust that we are working with BonsaiDB entities. These entities consist of a header (which contains metadata like the id) and the contents (holding the domain data). We’re going to handle the ids ourselves, so we only need the contents.

Please also note that I skipped error handling for brevity throughout the prototype.
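For completeness, here is a rough sketch of what replacing the `unwrap()` calls could look like. Everything in it is an assumption for illustration: `RepositoryError`, `find_name`, and `rename` are hypothetical names, and a plain `HashMap` stands in for the database so the example stays within the standard library.

```rust
use std::collections::HashMap;
use std::fmt;

// Hypothetical error type. A real one would wrap the errors
// returned by the database crate.
#[derive(Debug, PartialEq)]
pub enum RepositoryError {
    NotFound(String),
    Backend(String),
}

impl fmt::Display for RepositoryError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            RepositoryError::NotFound(id) => write!(f, "no entity with id {}", id),
            RepositoryError::Backend(msg) => write!(f, "database error: {}", msg),
        }
    }
}

impl std::error::Error for RepositoryError {}

// Stand-in for a fallible lookup: a missing entry becomes an error value.
pub fn find_name(
    store: &HashMap<String, String>,
    id: &str,
) -> Result<String, RepositoryError> {
    store
        .get(id)
        .cloned()
        .ok_or_else(|| RepositoryError::NotFound(id.to_string()))
}

// The `?` operator forwards the error to the caller instead of panicking.
// On success, the previous name is returned.
pub fn rename(
    store: &mut HashMap<String, String>,
    id: &str,
    new_name: &str,
) -> Result<String, RepositoryError> {
    let old_name = find_name(store, id)?;
    store.insert(id.to_string(), new_name.to_string());
    Ok(old_name)
}
```

With this shape, the repository methods would return `Result<..., RepositoryError>`, and the caller decides how to report a failure.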

SurrealDB

Our SurrealDB implementation works similarly, but this time, we must also provide the database table name as SurrealDB requires it as part of the primary key.

    pub struct SurrealRepository<'a, TData> {
        // reference to SurrealDB's Database object
        db: &'a Surreal<Db>,
        // required as generic type not used
        phantom: PhantomData<TData>,
        // this is needed by SurrealDB API to identify objects
        table_name: &'static str,
    }

Again, our trait implementation mainly wraps the underlying database API:

    // Repository implementation for SurrealDB database
    #[async_trait]
    impl<'a, TData> Repository<TData> for SurrealRepository<'a, TData>
    where
        TData: Sync + Send + DeserializeOwned + Serialize,
    {
        async fn query_all(&self) -> Vec<TData> {
            let entities: Vec<TData> = self.db.select(self.table_name).await.unwrap();
            entities
        }

        // here we need the id, although it's already stored in the data
        async fn insert(&self, data: TData, id: &str) -> TData {
            let created = self.db.create((self.table_name, id))
                .content(data).await.unwrap();
            created
        }

        async fn edit(&self, id: &str, data: TData) -> TData {
            let updated = self.db.update((self.table_name, id))
                .content(data).await.unwrap();
            updated
        }

        async fn query_by_id(&self, id: &str) -> Option<TData> {
            let entity = self.db.select((self.table_name, id)).await.unwrap();
            entity
        }
    }

To complete our repository implementation, we need one last thing: an entity we can store in the database. For this, we use a simplified version of a widespread UML domain type, the Classifier. This is a general type in UML used to describe concepts like a Class, Interface, or Datatype [4].

Our Classifier struct contains some typical domain properties, but also an _id field which serves as primary key.

    #[derive(Debug, Serialize, Deserialize, Default, Collection)]
    #[collection( // custom key definition for BonsaiDB
        name = "classifiers",
        primary_key = String,
        natural_id = |classifier: &Classifier| Some(classifier._id.clone()))]
    pub struct Classifier {
        pub _id: String,
        pub name: String,
        pub position: Point,
        pub is_interface: bool,
        pub custom_dimension: Option<Dimension>,
    }

To tell BonsaiDB that we manage entity ids through the _id field, we need to annotate our type with the additional collection attribute shown above.

While having our own ids requires a bit more work on our side, it helps us to abstract database-specific implementations away and makes adding new database engines easier.
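One way to make this convention explicit would be a small trait that exposes the id of an entity, so that storage code can derive the key from the entity itself. This is only a sketch of the idea and not something the prototype does. `Identifiable` and `upsert` are hypothetical names, and the `Classifier` here is reduced to two fields.

```rust
use std::collections::HashMap;

// Hypothetical trait: every storable entity can report its own id.
pub trait Identifiable {
    fn id(&self) -> &str;
}

// Reduced version of the article's Classifier.
#[derive(Debug, Clone, PartialEq)]
pub struct Classifier {
    pub _id: String,
    pub name: String,
}

impl Identifiable for Classifier {
    fn id(&self) -> &str {
        &self._id
    }
}

// Inserts or replaces an entity, using the id stored in the entity
// as the key. Returns the previously stored entity, if there was one.
pub fn upsert<T: Identifiable>(store: &mut HashMap<String, T>, entity: T) -> Option<T> {
    store.insert(entity.id().to_string(), entity)
}
```

Since the key always comes from the entity, the storage backend never has to generate or interpret ids on its own.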

3. Connect the repository with our business logic

To connect the database backend with our application logic, we inject the Repository trait into the ClassifierService and narrow the type argument to Classifier.

The actual implementation type of the repository (and thus, its size) is not known at compile time, so we have to use the dyn keyword in the declaration.

    // classifier service holding a typed repository
    pub struct ClassifierService {
        // constraints required by Tauri to support multi threading
        repository: Box<dyn Repository<Classifier> + Send + Sync>,
    }

    impl ClassifierService {
        pub fn new(repository: Box<dyn Repository<Classifier> + Send + Sync>) -> Self {
            Self { repository }
        }
    }
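The following sketch shows why a boxed trait object is a natural fit here: the backend is chosen at runtime, so the service cannot name a concrete repository type. All names (`EmptyBackend`, `FixedBackend`, `NameService`, `build_service`) are made up for this example, and the trait is a synchronous, single-method stand-in for the real one.

```rust
// Synchronous stand-in for the article's async Repository trait.
pub trait Repository<T> {
    fn query_all(&self) -> Vec<T>;
}

// Two hypothetical backends with different sizes and behavior.
pub struct EmptyBackend;

pub struct FixedBackend {
    names: Vec<String>,
}

impl Repository<String> for EmptyBackend {
    fn query_all(&self) -> Vec<String> {
        Vec::new()
    }
}

impl Repository<String> for FixedBackend {
    fn query_all(&self) -> Vec<String> {
        self.names.clone()
    }
}

// The service only knows the trait. Send + Sync allow it to be
// shared between threads.
pub struct NameService {
    repository: Box<dyn Repository<String> + Send + Sync>,
}

impl NameService {
    pub fn new(repository: Box<dyn Repository<String> + Send + Sync>) -> Self {
        Self { repository }
    }

    pub fn count(&self) -> usize {
        self.repository.query_all().len()
    }
}

// Picks the backend at runtime and injects it into the service.
pub fn build_service(use_fixed: bool) -> NameService {
    let repository: Box<dyn Repository<String> + Send + Sync> = if use_fixed {
        Box::new(FixedBackend {
            names: vec!["Class".to_string(), "Interface".to_string()],
        })
    } else {
        Box::new(EmptyBackend)
    };
    NameService::new(repository)
}
```

Both branches produce the same `Box<dyn ...>` type even though the two backends differ in size, which is what the indirection through `Box` buys us.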

Since this is only for demonstration purposes, our service will delegate most of the work to the repository without any sophisticated business logic. For managing our entities’ primary keys, we rely on the uuid crate to generate unique ids.

The following snippet is only an excerpt. For the complete implementation, please see the GitHub repository.

    impl ClassifierService {
        pub async fn create_new_classifier(&self, new_name: &str) -> Classifier {
            // we have to manage the ids on our own, so create a new one here
            let id = Uuid::new_v4().to_string();
            let new_classifier = self.repository.insert(Classifier {
                _id: id.clone(),
                name: new_name.to_string(),
                is_interface: false,
                ..Default::default()
            }, &id).await;
            new_classifier
        }

        pub async fn update_classifier_name(
            &self, id: &str, new_name: &str) -> Classifier {
            let mut classifier = self.repository.query_by_id(id).await.unwrap();
            classifier.name = new_name.to_string();
            // we need to copy the id because "edit" owns the containing struct
            let id = classifier._id.clone();
            let updated = self.repository.edit(&id, classifier).await;
            updated
        }
    }

4. Integrate everything into our Tauri application

Let’s move on to the last part, where we assemble everything to get our application up and running.

For this prototype, we will focus only on two simple use cases:

(1) On application startup, the main process sends all available classifiers to the webview (a new classifier will automatically be created if none exists), and

(2) editing the classifier’s name via the edit field will update the entity in the database.
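Stripped of Tauri and the database, the logic behind these two use cases can be sketched as follows. This is a simplified, synchronous illustration under several assumptions: `ClassifierStore`, `load_or_create`, and the default name "New Classifier" are invented for the example, and a counter replaces the UUIDs used in the prototype.

```rust
use std::collections::BTreeMap;

// Reduced version of the article's Classifier.
#[derive(Debug, Clone, PartialEq)]
pub struct Classifier {
    pub id: String,
    pub name: String,
}

// Hypothetical in-memory stand-in for service plus repository.
#[derive(Default)]
pub struct ClassifierStore {
    items: BTreeMap<String, Classifier>,
    next_id: u32,
}

impl ClassifierStore {
    // Use case (1): return all classifiers and create a default one
    // if the store is still empty.
    pub fn load_or_create(&mut self) -> Vec<Classifier> {
        if self.items.is_empty() {
            self.next_id += 1;
            // The prototype generates UUIDs, a counter keeps this sketch std-only.
            let id = format!("classifier-{}", self.next_id);
            let classifier = Classifier {
                id: id.clone(),
                name: "New Classifier".to_string(),
            };
            self.items.insert(id, classifier);
        }
        self.items.values().cloned().collect()
    }

    // Use case (2): rename an existing classifier.
    // Returns None if the id is unknown.
    pub fn rename(&mut self, id: &str, new_name: &str) -> Option<Classifier> {
        let classifier = self.items.get_mut(id)?;
        classifier.name = new_name.to_string();
        Some(classifier.clone())
    }
}
```

In the real application, the first function would run when the webview requests the initial state, and the second one whenever the edit field reports a new name.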

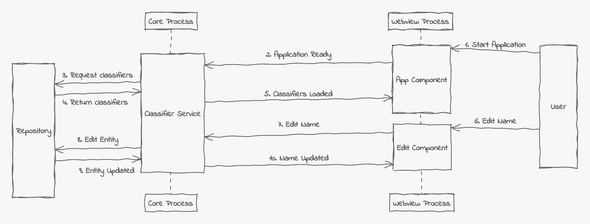

See the following diagram for the complete workflow: